Just to remind you, during a digital investigation we are supposed to gather two types of documentation. The first one, non-technical, is taken during the initial response. The investigator looks for any information that can be gathered on or near the crime scene, checking the IT infrastructure, the personnel and the circumstances. As an example, imagine that you call your support team to say that your app is not working properly. As usual, they call you back the next day and tell you that they need logs, screenshots or any other information showing what state your application was in when you faced the problem. And by then it is too late. So, yes, the purpose of non-technical information is to capture any data showing when the incident happened, what the status of the IT infrastructure was, which personnel were involved and so on. Believe it or not, sometimes this information is the clue.

Moving forward. On the other hand we have the technical documentation, which can be divided into network-based evidence (NBE) and host-based evidence (HBE). I am pretty sure that the first one was well presented before, and I will come back to it in the future, but for now I would like to focus on host-based evidence. So what does HBE mean?

This is simply a collection of information gathered in the live incident response process. Memory imaging (and disk imaging) can also be counted as part of live response, as one of its subtypes. Host-based evidence is a set of logs, documents, files, records, network connections, routing tables, lists of processes, libraries and so on. It is everything that can be obtained from the machine itself and not from the network nodes nearby.

There are several ways of acquiring HBE:

a) Live Response (involves several approaches, and also data acquisition for post-mortem analysis)

b) Forensic Duplication (Digital Archeology and Malware Investigation)

In this article I would like to describe what Live Response looks like, regardless of the circumstances, such as an intrusion detection process, malware, or simply troubleshooting. What is more, there are several (commercial) tools that can perform live response on a remote machine without requiring any expertise from the investigator. Here I want to present what information should be obtained from the subject host, and which tools will be helpful. I would also like to remind you that there is a myriad of software that can be used, and that the investigator should take appropriate training. I strongly recommend reading several books on data acquisition, computer forensics or even file-system investigation. Please refer to the short table of contents:

Prerequisites

Soundness (mention anti-forensics)

Good practices

OOV theory

Data Acquisition

Why Live?

Summary

Everything you do during an investigation must be planned and must follow a clear methodology. You don’t want to launch panic mode and find yourself somewhere between broken documentation and a destroyed subject system. At higher levels of incident response maturity, the investigator is supposed to adjust his or her thinking and tools to achieve as much as possible. By achieving, I mean obtaining ‘correct’ information and following the clues in the process of problem detection. After reviewing all the collected data, or during that process, the investigator (alone or with the CSIRT) will reach a point where an appropriate response strategy should be chosen. If it is possible, other information can also be checked and collected. Please refer to the previously posted article. From this short paragraph it becomes clearer that we need a plan, a formula that we should follow during the investigation. I would strongly recommend finding additional information about the incident response process from a process modeling point of view, and checking what I have written previously.

When we talk about a live system and data acquisition, we need to know that everything we do on the system changes it somehow. It is a very hard task, but a possible one, to check what alteration the investigator makes when executing commands or launching programs. In the literature we can find the term “forensic soundness”, and with it “anti-forensic” actions. Please also check what “evidence dynamics” means. It is a very interesting subject. Having said that, we can move smoothly to the OOV theory. This abbreviation stands for Order Of Volatility, introduced by Farmer and Venema. The theory is that every piece of information is stored in the operating system in a different layer or storage, as in the screenshot below. It presents how long each piece of information stays alive, that is, the life expectancy of the data. It must be taken into consideration when acquiring any information from any OS.

And, please… Never, and I mean never, forget about documentation. Build your documentation carefully, take notes, screenshots, files etc., and you will quickly notice how beneficial it is.
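One way to keep such notes consistent is to record every action in a small, append-only log. The sketch below is only an illustration of that idea, not a prescribed tool: the function names `make_note` and `append_note`, the field names, and the JSON-lines file format are all assumptions made for this example. Each note stores a UTC timestamp, who acted, what was done, and a SHA-256 digest of the collected output so that later copies can be compared against it.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_note(action, output, investigator="analyst"):
    """Build one documentation entry (hypothetical format, for illustration)."""
    return {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "investigator": investigator,
        "action": action,
        # Digest of the collected bytes, so later copies can be verified.
        "sha256": hashlib.sha256(output).hexdigest(),
        "size_bytes": len(output),
    }


def append_note(path, note):
    """Append the entry as one JSON line; earlier entries are never rewritten."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(note) + "\n")


if __name__ == "__main__":
    note = make_note("listed network connections", b"example output")
    print(json.dumps(note, indent=2))
```

In practice the log file should live on media you brought with you rather than on the subject system, since writing to the subject disk changes it.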

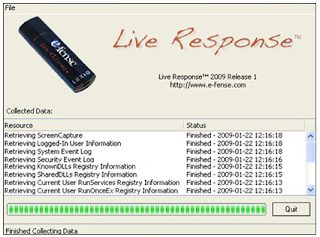
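The practical consequence of the order of volatility is that the shortest-lived data should be collected first. A minimal sketch of that rule follows. The source names and the numeric ranks are assumptions chosen for illustration (they follow the commonly cited ordering from registers down to backup media, not the exact contents of the figure), and `EvidenceSource` and `collection_order` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvidenceSource:
    name: str
    volatility_rank: int  # 1 = most volatile; ranks are illustrative assumptions


# Deliberately listed out of order to show that the sort does the work.
SOURCES = [
    EvidenceSource("disk contents", 5),
    EvidenceSource("main memory", 2),
    EvidenceSource("running processes", 4),
    EvidenceSource("CPU registers and caches", 1),
    EvidenceSource("network state", 3),
    EvidenceSource("backup media", 6),
]


def collection_order(sources):
    """Return the sources sorted so the shortest-lived data comes first."""
    return sorted(sources, key=lambda s: s.volatility_rank)


if __name__ == "__main__":
    for step, source in enumerate(collection_order(SOURCES), start=1):
        print(f"{step}. {source.name}")
```

A real plan would also have to account for what is actually reachable with the access and tools available on the subject machine.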

In the very newest literature you can find that by automating the process of volatile data acquisition we can save time and avoid misunderstandings and failures, and the software will do the job for us! During the data acquisition process itself, several things must be pointed out. Think about what access to the subject machine you have: is it local or remote? What privileges do you have, or, going further, what are the infrastructure restrictions and what protocols can be used? Then, how do you establish a trusted connection, and what tools can you use? Is it possible to transfer your software? Lastly, how do you preserve volatile evidence, how do you get it, and what comes next?
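To make the idea of automated acquisition a little more concrete, here is a rough sketch of a collector that runs a fixed list of commands in order and saves each output to its own file. Everything in it is an assumption for illustration: the function name `acquire`, the `(label, argv)` command format, and the output layout are hypothetical, and the demo runs a harmless placeholder instead of real system utilities. In a real response the commands would be trusted binaries from your own media, and the output directory would be external storage or a network share, not the subject disk.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def acquire(commands, out_dir, timeout=30):
    """Run each (label, argv) pair in order and save its stdout under out_dir."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    results = []
    for label, argv in commands:
        try:
            proc = subprocess.run(argv, capture_output=True, timeout=timeout)
            status = proc.returncode
            data = proc.stdout
        except (OSError, subprocess.TimeoutExpired) as exc:
            # Record the failure instead of aborting the whole collection run.
            status = None
            data = str(exc).encode()
        target = out_dir / f"{label}.txt"
        target.write_bytes(data)
        results.append((label, status, target))
    return results


if __name__ == "__main__":
    # Placeholder command; substitute trusted tools appropriate to the host.
    demo = [("placeholder", [sys.executable, "-c", "print('volatile data placeholder')"])]
    with tempfile.TemporaryDirectory() as tmp:
        for label, status, target in acquire(demo, tmp):
            print(label, status, target.name)
```

Keeping the command list as plain data makes it easy to review the plan before touching the system and to record afterwards exactly what was run.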
