Redline is MANDIANT’s free tool for investigating hosts for signs of intrusion activity and malware. It analyzes files and memory and builds a threat assessment profile at the same time. In short, it is a great tool for live incident response and data analysis. Instead of acquiring live data manually (a bad idea anyway), we can use the Redline Collector to gather volatile and non-volatile information, optionally adding IOCs, and then analyze it quickly and automatically on the investigator's machine (with support from whitelists and the MRI). The results can then be confirmed with Memoryze or Volatility for a more ‘controlled’ study. Below is a quick description of Redline’s modules and features.

Rapid Triage:

Without any installation or alteration of the system state, a single .bat script can be run on the subject host. It audits all processes, drivers, and network connections. What is more, Redline Triage can capture the file structure and a memory dump. Great for live response, where volatile data matters.

Reveals Hidden Malware:

The Collector is capable of memory imaging and deep analysis. It works at the kernel level, so malware (rootkits, hidden processes) that is present in memory (and possibly not on disk) becomes visible.

Guided Analysis:

The description below, taken from the guide, presents the philosophy and purpose of Redline. The statement “takes the guesswork out of task and time allocation” is so true, and it shows that memory inspection or intrusion detection is not an easy task.

MANDIANT Redline streamlines memory analysis by providing a proven workflow for analyzing malware based on relative priority. This takes the guesswork out of task and time allocation, allowing investigators to provide a focused response to the threats that matter most. Redline calculates a “Malware Risk Index” that highlights processes more likely to be worth investigating, and encourages users to follow investigative steps that suggest how to start. As users review more audits from clean and compromised systems, they build up the experience to recognize malicious activity more quickly.

Investigative Steps:

More good advice comes from Mandiant. It is obvious, but I really like it when developers and IT security pros recommend training and learning.

Redline can collect a daunting amount of raw information. The key to becoming an effective investigator is to review Redline data from a variety of “clean” and “compromised” systems. As you gain experience, you will learn to quickly identify suspicious patterns.

Malware Risk Index Scoring and MD5 Whitelisting:

During deep analysis, Redline uses its rules and techniques to calculate an MRI for each process in the captured memory dump or live data. The MRI uses different colors to indicate risk. Of course there will be some false positives and false negatives, but Redline also gives an easy way to tune the scoring. Furthermore, the majority of processes are legitimate and standard. MANDIANT has compiled MD5 hashes for multiple OSes and their components. Whitelisting lets us filter out the large amount of information that is known and unaltered, and so definitely not interesting during intrusion detection.
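To make the whitelisting idea concrete, here is a minimal Python sketch of hash-based filtering. It is not how Redline implements it; the function names and the one-hash-per-line whitelist format are my own assumptions, chosen only to show the principle: hash each file, drop everything whose MD5 is already known, and look at what is left.

```python
import hashlib


def md5_of_file(path, chunk_size=65536):
    """Return the hex MD5 digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def load_whitelist(path):
    """Load known-good hashes, one MD5 hex digest per line.

    The plain-text format is an assumption for this sketch, not the
    format of the MANDIANT whitelist.
    """
    with open(path, "r", encoding="utf-8") as handle:
        return {line.strip().lower() for line in handle if line.strip()}


def filter_unknown(paths, whitelist):
    """Return (path, md5) pairs for files whose hash is NOT whitelisted."""
    unknown = []
    for path in paths:
        file_hash = md5_of_file(path)
        if file_hash not in whitelist:
            unknown.append((str(path), file_hash))
    return unknown
```

Calling `filter_unknown(list_of_paths, load_whitelist("whitelist.txt"))` (both names hypothetical) returns only the files that still need a human look. A match on the whitelist means "known and unaltered", nothing more; an unknown hash is not automatically malicious.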

Indicators of Compromise (IOCs):

mandiant_ioc_finder.exe can be used to look for IOCs on the subject system. Mandiant has developed an open, XML-based standard for defining and sharing threat information.

Going well beyond static signature analysis, IOCs combine over 500 types of forensic evidence with grouping and logical operators to provide advanced threat detection capability.
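The "grouping and logical operators" part is easier to see in code. Below is a rough Python sketch that evaluates a nested AND/OR indicator against collected evidence. The dictionary layout and the field names (`file_name`, `file_md5`, `remote_port`) are invented for illustration and are not the real IOC XML schema or its terms; the hash is just a placeholder value.

```python
def evaluate(node, evidence):
    """Recursively evaluate a hypothetical indicator tree.

    A leaf looks like {"field": ..., "equals": ...}.
    A group looks like {"operator": "AND" | "OR", "children": [...]}.
    `evidence` maps a field name to the set of values seen on the host.
    """
    if "operator" in node:
        results = (evaluate(child, evidence) for child in node["children"])
        if node["operator"] == "AND":
            return all(results)
        if node["operator"] == "OR":
            return any(results)
        raise ValueError(f"unknown operator: {node['operator']}")
    return node["equals"] in evidence.get(node["field"], set())


# Hypothetical indicator: (file name AND file hash) OR a suspicious port.
indicator = {
    "operator": "OR",
    "children": [
        {
            "operator": "AND",
            "children": [
                {"field": "file_name", "equals": "updater.exe"},
                {"field": "file_md5",
                 "equals": "d41d8cd98f00b204e9800998ecf8427e"},
            ],
        },
        {"field": "remote_port", "equals": 4444},
    ],
}

# Hypothetical evidence gathered from one host.
evidence = {
    "file_name": {"svchost.exe", "updater.exe"},
    "remote_port": {443, 4444},
}

print(evaluate(indicator, evidence))
```

The point of the grouping is that one weak sign (a file name alone) does not have to fire the indicator, while a combination of signs, or a single strong one, does.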

We have several methods of data collection: Standard Collector, Comprehensive Collector, and IOC Search Collector. Each of them can be modified to provide fast, predefined options for later incident response. Memory image acquisition can be enabled in all of them. The ‘Analyze Data’ section provides several options. We simply choose what we want to analyze (on the investigator workstation!) and which ‘external’ feeds should be added: do we have an IOC report, or an imported whitelist?

One last interesting option, nicely done:

"Analyze this Computer" option is offered only for training and demonstration purposes. It performs a robust acquisition and analysis of the local system, with options for saving the results. This is an great way to gain experience using Redline but is not recommended for investigations. For real-world use on multiple systems, follow the workflow for Using a Collector. It is important that Redline analysis be carried out on a clean and protected workstation: this is easily accomplished using the Collector to bring captured data from potentially compromised systems to a secure Redline workstation. Do not risk compromising your collected evidence!

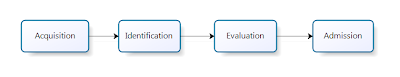

Workflow

The process delivered by Mandiant is rather straightforward: collect, import, and investigate. Collecting and importing are very easy, and investigating is simple too. We have several options when it comes to collecting (do not forget about IOC Finder). Here we need to create a new ‘case’ and then just launch RunRedlineAudit.bat on the compromised system. Please remember to plan where the acquired data will be stored! Once data has been collected from the host, it must be imported, and it is automatically saved as a .mans database file.

Investigation

Typical investigation steps include reviewing MRI scores, network connections/ports, memory sections/DLLs, handles, hooks, and drivers. It is worth mentioning that whitelists should be used when analyzing DLLs. We also have a set of filters here. What is more, we have a bunch of tools for memory image study and acquisition. Furthermore, we can acquire a driver. Another great feature is the Timeline, which provides a time-ordered list of events (use TimeWrinkle and TimeCrunch for filtering).

To sum up, we have a great tool with a set of awesome features. For sure, I will spend days getting to know Redline better, as it is a complex solution (together with MIR) connecting incident response, malware response, intrusion detection, and dead analysis. I strongly recommend reading the 'investigation' part of the guide, and also the appendix about best practices.

Source for manuals, whitepapers and software (+whitelist):

For more information about the IOC standard and IOC Finder, visit http://openioc.org/ and http://www.mandiant.com/resources/download/ioc-finder/